The origins of the cloud computing platform are closely linked to Service Oriented Architecture (SOA). In the cloud we think of everything as a service. These services have SLOs (Service Level Objectives), which are very similar to SLAs.

Why Failsafe Computing in Cloud?

As more and more organizations adopt the cloud platform, its perils become more apparent. We have seen Amazon go down a couple of times in 2012 and 2013 (http://www.slashgear.com/amazon-com-is-down-its-not-just-you-update-back-in-business-31267671/) and an Azure outage (http://www.zdnet.com/microsofts-december-azure-outage-what-went-wrong-7000010021/). The need for Failsafe Computing in the cloud is now.

Failsafe Computing in the cloud is not an afterthought. Like any other architecture discipline, it is a non-functional requirement that has found its rightful place.

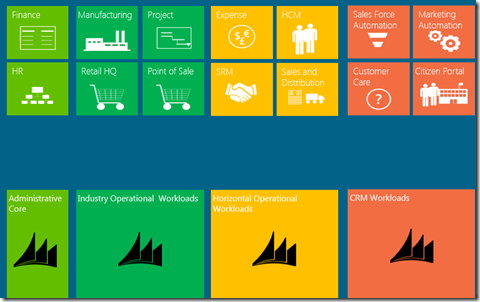

Any application built for the cloud is structured around services. These services have workloads associated with them. A service can be as generic as Sales Force Automation or Retail: an overarching service that comprises many other services. A workload is a broader concept; an example is given below.

What do Failsafe Services really mean?

We architect the cloud platform on the guidelines of SOA. We define a service as the basic unit of deployment, although of course it starts from the conceptual architecture. For example, a retail service is an independent piece of functionality in the cloud, going about its regular business. We would expect this service to have a defined SLO. The high-level attributes of Failsafe Services are:

- Software into Service: In the cloud platform everything is expressed in terms of services. Cloud projects are delivered as services with defined SLOs (availability …..)

- Services not Servers: In the cloud world our services are deployed on logical VMs and have the option to scale out. We no longer think in terms of servers.

- Decomposition by Workload: Cloud computing provides a layer of abstraction where the physical infrastructure, and even the appearance of physical infrastructure, has less of an impact on the overall application architecture. So instead of an application being required to run on a server, it can be decomposed into a set of loosely coupled services that have the freedom to run in the most appropriate fashion. This is the foundation of the workload model because what may be considered an appropriate way to run for one part of an application may be wildly different for another, hence the need to separate out the different parts of an application so that they can be dealt with separately. An example of workloads: “Consider an example of an e-commerce application and the two distinct features of catalogue search and order placement. Even though these features are used by the same user (consumer) their underlying requirements differ. Ordering requires more data rigour and security, whereas search needs to optimally scale. A search being slightly incorrect is tolerable, whereas an incorrect order is not. In this example, the single use case of searching for and ordering a product can be decomposed into two different workloads, and a third if we count the back-end integration with the order fulfilment process.”

- Utilize Scale Units: Designing by scale units is about defining a capacity block for the service. The capacity model of the block addresses the unit of scalability and availability for that service. One may argue that simply adding more VMs provides elasticity, but a scale unit is rather a set of VMs that can be added or removed together on the fly.

- Design for Operations: “Every service which runs in the cloud has to satisfy some operational asks.” For example, all services have to emit a basic level of telemetry, such as logging of health, issues and exceptions.

What does a service comprise?

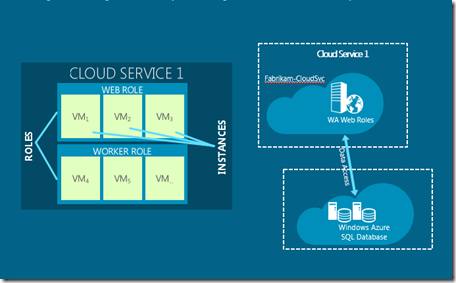

A service can comprise a number of web roles, worker roles or persistent VM roles, plus storage (tables, queues, blobs or SQL Azure), and can depend on other services as well. Services can have inter-service or intra-service dependencies.

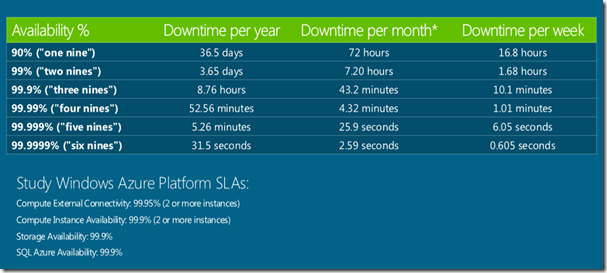

What are the SLAs around service availability?

The number of 9s for a service depends on the cloud platform, which offers 99.9%, but there is also a strong dependence on the code one writes in those services, for example a dependency on external services. Below is what the Azure platform provides as an SLA.
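Separately from any published platform SLA, the point about external dependencies can be illustrated with a little arithmetic. The sketch below is not the Azure SLA; it assumes that a request succeeds only if every dependency is up and that the dependencies fail independently, and the function names and the 99.9% figure for the external service are illustrative assumptions.

```python
from math import prod

MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60


def composite_availability(availabilities):
    """Availability of a service that needs every listed dependency to be up.

    Assumes independent failures, so the individual figures multiply.
    """
    return prod(availabilities)


def monthly_downtime_minutes(availability):
    """Expected downtime over a 30-day month for a given availability."""
    return (1.0 - availability) * MINUTES_PER_30_DAY_MONTH


if __name__ == "__main__":
    platform = 0.999          # figure quoted in the text
    external_service = 0.999  # hypothetical dependency
    combined = composite_availability([platform, external_service])
    print(f"combined availability: {combined:.4%}")
    print(f"expected downtime: {monthly_downtime_minutes(combined):.1f} min/month")
```

Each additional hard dependency lowers the achievable figure, which is why the code written inside the service matters as much as the platform number.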

What has throttling got to do with Services?

Services hosted in the cloud have to fulfil requests using the resources provided to them, and there are times when these resources become scarce or unavailable. One may end up using queues and setting a maximum number of messages beyond which no new messages are accepted. Throttling is a standard pattern on shared resources, and it needs to be dealt with at architecture time. For example, if Service A throttles after 5k requests/sec, use multiple accounts in your architecture. Another classic example: Facebook maintains 99.99% availability, but it has many more constraints in the fine print, such as: if you pound the site with over x requests/second, we will throttle you.
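The queue-with-a-maximum idea can be sketched in a few lines. This is a minimal in-process illustration using Python's standard `queue` module, not a cloud queue client; the `BoundedInbox` class and its method names are hypothetical.

```python
import queue


class BoundedInbox:
    """Accepts messages until a maximum is reached, then throttles."""

    def __init__(self, max_messages):
        self._queue = queue.Queue(maxsize=max_messages)

    def offer(self, message):
        """Return True if the message was accepted, False if throttled."""
        try:
            self._queue.put_nowait(message)
            return True
        except queue.Full:
            # The caller is expected to back off and try again later.
            return False

    def take(self):
        """Remove and return the oldest message (raises queue.Empty if none)."""
        return self._queue.get_nowait()


if __name__ == "__main__":
    inbox = BoundedInbox(max_messages=2)
    for name in ("order-1", "order-2", "order-3"):
        print(name, "accepted" if inbox.offer(name) else "throttled")
```

Rejecting work explicitly at the boundary lets the client decide how to back off, instead of letting the shared resource degrade for everyone.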

More on Decomposition by Workload?

Picking up where the earlier discussion of workloads left off.

Decomposition is essentially an Architectural Pattern [POSA].

When architecting for the cloud, we don't create all of these decomposed services just because the platform allows it. After all, it does increase the complexity and require more effort to build. In the context of cloud computing, this architectural pattern has, amongst others, the following benefits:

- Availability — well-separated services create fault isolation zones, so that a failure of one part of the application will not bring everything down.

- Increased scalability — where parts of the application that require high scalability are not held back by those that do not.

- Continuous release and maintainability — different parts of the application can be upgraded without application-wide downtime.

- Operational responsiveness — operators can monitor, plan and respond better to different events.

The workload model requires that features be decomposed into discrete workloads that are identifiable, well named, and can be referenced. These workloads form the basis of the services that will deliver the required functionality. The workloads are also used in other ALM models to establish the architectural boundaries of services as they apply to specific models.

Decomposing Workloads

There are no easy rules for decomposing workloads which is why it should only be tackled by an experienced architect. An architect with little cloud computing experience will probably err on the side of not enough decomposition. The challenge is identifying the workloads for your particular application. Some are obvious, while others less so, and too much decomposition can create unnecessary complexity. Workloads can be decomposed by use case, features, data models, releases, security, and so on.

As the architect works through the functionality, some key workloads may become clear early on. For example:

- Front-end workloads (where an immediate request response is required) can be easily distinguished from back-end workloads (where processing can be offloaded to an asynchronous process).

- Scheduled jobs, such as ETL, need to be kicked off at a particular time of day.

- Integration with legacy systems.

- Low load internal administrative features, such as account administration.

Indicators of differing workloads

Determining how to decompose workloads is the responsibility of the architect, and experienced architects should take to it quite easily. The following indicators of differing workloads are only a guide, as the particular application and environment may have differing indicators.

Feature roll-out

The separation of features into sets that are rolled out over time is often an indicator of separate workloads. For example, an e-commerce application may have the viewing of product browsing history in the first release, with viewing of product recommendations based on browsing history in a subsequent release. This indicates that product recommendations can be in a separate workload to simple browsing history.

Use case

A single user, in a single session, may access different features that appear seamless to the user but are separate use cases. The separate use cases may indicate separate workloads. For example, the primary use case on Twitter of viewing your timeline and tweeting is separate from the searching use case. Searching is a separate workload, which is implemented on Twitter as a completely separate service.

User wait times

Some features require that the service provides a quick response, while for others the user is prepared to wait longer. For example, a user expects that items can be removed from a shopping basket immediately, but is prepared to wait for order confirmations to be e-mailed through. This difference in wait time indicates that there are separate workloads for basket features and order confirmation.

Model differences

Workload decomposition is important in the design phase because all other models that need to be developed in design (such as the data model, security model, operational model, and so on) are influenced by the various workloads. Using our e-commerce example, without identifying search and ordering as separate workloads, we would get stuck when developing the security model: we would either end up with too much security for search (which is essentially public data, and has low security) by lumping it together with the higher security requirements for orders, or the reverse, where we are exposed to hacking because orders are insecure.

In the process of working through the models, a clue that workloads are incorrectly defined is when a model doesn't seem to fit cleanly with the workload. This may indicate that there are two workloads that need to be separated out. Whilst it is better to clearly define the workloads early on, it is possible that some will emerge later in the design, or indeed as requirements change during development. The problem, of course, is that when new workloads are identified they need to be reviewed against models that have already been developed, as at least one model would have changed.

Below are some examples where a difference in a model indicates the possibility that the feature is composed of two different workloads:

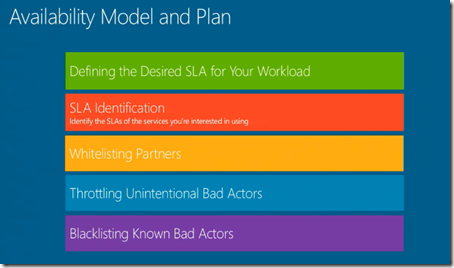

- Availability model — When developing the availability model, if one feature has higher availability requirements than another, then it may indicate that there are separate workloads. For example, the Twitter API (as used by all Twitter clients) needs to be far more available than search.

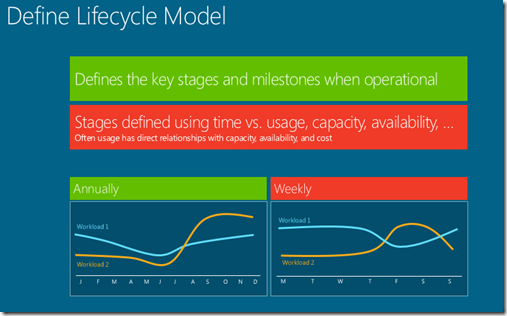

- Lifecycle model — The lifecycle model may show that a particular feature is subject to spiky traffic or high load. In order to be able to scale that feature, it should be in a separate workload to those that have flatter usage patterns. For example, hotel holiday bookings may be spiky because of promotions, seasons or other influences, but the reviewing of hotels by guests may be a lot flatter. So, hotel reviews may be in a separate workload.

- Data model — The data model separates data into schemas that may be based on workloads, so getting the workload model and the data model aligned is important. Features that use different data stores indicate possible workload separation. For example, the product catalogue may be in a search optimised data store, such as SOLR, whereas the rest of the application stores data in SQL. This may indicate that search is a distinct and separate workload.

- Security model — Features or data that have different security requirements can indicate separate workloads. For example, in question and answer applications the reading of questions may be public, but asking and answering questions requires a login. This may indicate that viewing and editing are separate workloads.

- Integration model — Different integration points often require separate workloads. While some integration may require immediate data, such as a stock availability lookup and will be in the same workload as other functionality, the overnight updating of stock on-hand may be a separate workload.

- Deployment model — Some functionality may be subject to frequent changes while others remain static, indicating the possibility of separate workloads. For example, the consumer-facing part of an application may update frequently as features are added and defects fixed, whereas the admin user interface stays unchanged for longer periods. The need to deploy one set of functionality without having to worry about others can be helped by separating the workloads.

Implementing workloads as services

Workloads are a logical construct, and the decision about what workloads to put into what services remains an implementation decision. Ultimately, many workloads will be grouped into a single service, but this should not impact the logical separation of the workloads. For example, the web application service may contain many front-end workloads because they work better together as a single service. Another example is the common pattern of having a single worker role process messages from multiple queues, resulting in a number of workloads being handled by a single role.

The decision to group workloads together should happen late in the development cycle, after most of the ALM models have been completed, as the differences across models may be significant enough to warrant separate implemented services.

Identified workloads

The primary output of the workload model is a list of workloads, with some of their characteristics, so that they can be used and referenced in other ALM models. For each identified workload:

- Name the workload.

- Provide a contextual description of the workload. Bias the description towards the business requirement so that all stakeholders can understand it.

- Briefly highlight relevant technical aspects of the workload that may influence the model. For example, the workload may have special latency requirements, or need to interface with an external system. These aspects should be quick and easy to read through for all workloads when developing the models.

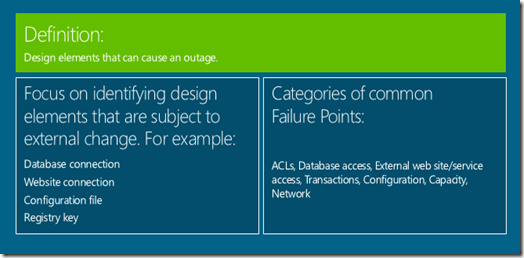

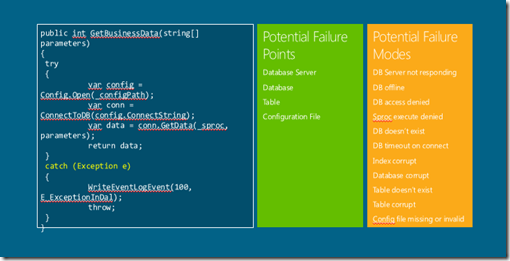

How do we look at failure points?

Most applications today handle failure points very poorly by design. What does this mean? Referring back to the e-commerce example, one could go to an internet site, try to place an order, and bang: an error message such as “failed to connect to x order service”, with some cryptic stack trace. Most error messages written today are meant for the developer, not for operations folks. A failure point is a place in the code which has an external dependency, for example opening a database connection or accessing a configuration file. The typical error message the operations team would expect is “this <action> (open) of this <artefact> (database) failed due to this probable <reason> (a timeout)”. The other classic case is the try and catch block, where an exception is thrown from the lowest level up to the highest level, which is itself expensive, without proper messaging.
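A minimal sketch of an operations-friendly message at a failure point, following the action / artefact / reason shape described above. The `FailurePointError` class, the `open_order_database` function and the `connect` callable are hypothetical names introduced only for illustration.

```python
import logging

logger = logging.getLogger("failure_points")


class FailurePointError(Exception):
    """Error raised at a failure point, worded for the operations team."""

    def __init__(self, action, artefact, reason):
        self.action = action
        self.artefact = artefact
        self.reason = reason
        super().__init__(f"{action} of {artefact} failed; probable reason: {reason}")


def open_order_database(connect):
    """Open the order database via `connect`, a caller-supplied callable."""
    try:
        return connect()
    except TimeoutError as exc:
        error = FailurePointError("open", "order database", "connection timeout")
        # Log once, at the failure point, in terms operations can act on.
        logger.error("%s", error)
        # Keep the original exception chained for developers.
        raise error from exc
```

The message is built where the external dependency is touched, so the context (what was attempted, on what, and why it probably failed) is not lost as the exception travels up the stack.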

Failure Mode Analysis(FMA)

A Failure Mode is a predictable root cause of the outage that occurs at a Failure Point. Failure Modes are the various conditions that can be experienced at a Failure Point.

A Failure Point is an external condition most of the time; Failure Modes identify the root cause of an outage at a Failure Point. What the developer needs to be wary of here is how much of the failure can be fixed by a simple retry and how much has to be reported out. Retries go far beyond just database connections: they can apply to opening a service, connecting to the service bus, etc.
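The "simple retry" decision can be sketched as follows. This is an illustrative helper, not a particular library's API: `TransientError`, `call_with_retry` and the backoff parameters are assumptions, and which failure modes count as transient has to come out of the failure mode analysis itself.

```python
import time


class TransientError(Exception):
    """A failure mode that is expected to clear on its own (e.g. a timeout)."""


def call_with_retry(operation, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Run `operation`, retrying transient failures with exponential backoff.

    Any other exception is treated as non-transient and propagates at once,
    so that it can be reported out instead of retried.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # retries exhausted: report the failure
            sleep(base_delay * 2 ** (attempt - 1))
```

The `sleep` parameter is injectable only so the delays can be observed or skipped in tests; in normal use the default `time.sleep` applies.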

Failure Mode Example

Failure Mode Modelling is as important as Threat Modelling and should be part of the overall project lifecycle.

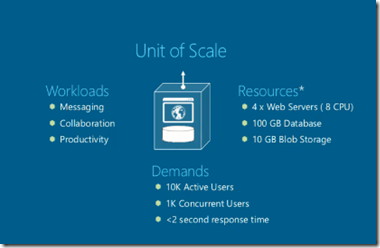

What is a Scale Unit in the Cloud World?

A Unit of Scale is associated with a service and a workload, and is the unit of deployment when scaling up or down. A Unit of Scale has the following:

- Workloads: Messaging, Collaboration, Productivity.

- Resources: 4 web roles (8 CPU)

- Storage: 100 GB database, 10 GB blob storage

- Demands it can meet: 10k active users, 1k concurrent users, < 2 seconds response time.
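As a rough sketch of how such a scale unit could be used for capacity planning, the snippet below models the example unit above and computes how many whole units a given demand needs. The `ScaleUnit` type and `units_required` function are hypothetical, and the calculation assumes capacity grows linearly with the number of units.

```python
from dataclasses import dataclass
from math import ceil


@dataclass(frozen=True)
class ScaleUnit:
    """One capacity block: the resources it contains and the demand it meets."""
    web_roles: int
    database_gb: int
    blob_gb: int
    active_users: int
    concurrent_users: int


def units_required(unit, active_users, concurrent_users):
    """Whole scale units needed to meet the demand (always at least one)."""
    by_active = ceil(active_users / unit.active_users)
    by_concurrent = ceil(concurrent_users / unit.concurrent_users)
    return max(by_active, by_concurrent, 1)


# Figures taken from the example scale unit in the text.
EXAMPLE_UNIT = ScaleUnit(
    web_roles=4,
    database_gb=100,
    blob_gb=10,
    active_users=10_000,
    concurrent_users=1_000,
)

if __name__ == "__main__":
    n = units_required(EXAMPLE_UNIT, active_users=25_000, concurrent_users=1_500)
    print(f"{n} scale units, i.e. {n * EXAMPLE_UNIT.web_roles} web roles")
```

Scaling then means adding or removing whole units, so the web roles and storage that were tested together are also deployed together.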

Fault and Upgrade Domains

A cloud architecture or design is only as strong as its weakest component. A failed component must not be able to take down the service. Make sure there are dual domains, or a “minimum of 2 instances”. Upgrade Domains are another such area. Both of these are an inherent part of Windows Azure; more information can be found here.

What considerations does one need to give to applications?

The following are the recommendations:

- Default to asynchronous

- Handle Transient Faults

- Circuit Breaker Pattern: Services in a cloud architecture generally have an availability of 99.99%; with 2 instances the availability can be increased further, and by adding geo-redundancy we can achieve much more. Throttling caused by overwhelming client calls, or other failure conditions, requires clients to be written so that retries to the service happen in a safe manner, i.e. when the service is up. When developing enterprise-level applications, we often need to call external services and resources. One method of attempting to overcome a service failure is to queue requests and retry periodically. This allows us to continue processing requests until the service becomes available again. However, if a service is experiencing problems, hammering it with retry attempts will not help the service to recover, especially if it is under increased load. Such a pounding can cause even more damage and interruption to services. If we know there could potentially be a problem with a service, we can help take some of the strain by implementing a Circuit Breaker pattern on the client application.

- Automate All the Things
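The Circuit Breaker recommendation in the list above can be sketched on the client side as follows. This is a minimal, single-threaded illustration under assumed names and thresholds (`CircuitBreaker`, `failure_threshold`, `reset_timeout`), not a production implementation or any specific library's API.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    """Stops calling a failing service for a while so that it can recover."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, operation):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; call skipped")
            # Timeout elapsed: half-open, let one trial call through.
        try:
            result = operation()
        except Exception:
            self._failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

While the circuit is open the client fails fast instead of adding load to a struggling service; after `reset_timeout` a single trial call decides whether normal traffic resumes.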

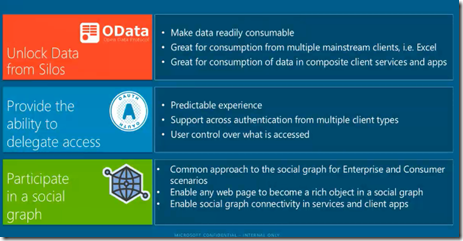

Embrace Open Standards: this is a bit of prescriptive guidance which can help.

- OData: use OData as the standard data protocol

- OAuth: identity standard

- Open Graph

These standards are discussed in a Failsafe context because there is no need to reinvent the wheel in the data, identity and social arenas, and using them promotes easy interoperability.

Data Decomposition

In the cloud world it is key to understand that reading and writing from a single store has its limitations. There is no defined limit on the number of concurrent connections to SQL Azure, but there is a high chance that too many connections will lead to throttling. Most architects give a lot of importance to the application and service layers from a scale unit standpoint, but tend to forget that the database may also need some kind of partitioning, i.e. horizontal, vertical, etc.

Apply functional decomposition to the database layer too.

- Don’t force partitioning for the sake of partitioning; this will impact manageability.

- Partition where and when required to reduce dependencies and to allow independent management and scale.
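Horizontal partitioning can be sketched as a routing function that maps a partition key to one of several databases. The shard names and the `shard_for` function are hypothetical, and hash-modulo routing is only one possible scheme, chosen here for brevity.

```python
import hashlib

# Hypothetical database names, one per horizontal partition.
SHARDS = ["orders_db_0", "orders_db_1", "orders_db_2", "orders_db_3"]


def shard_for(key, shards=SHARDS):
    """Pick the shard for a partition key such as a customer id.

    A stable hash is used (rather than Python's built-in hash(), which is
    randomized per process) so every instance routes a key the same way.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


if __name__ == "__main__":
    for customer in ("customer-1", "customer-2", "customer-3"):
        print(customer, "->", shard_for(customer))
```

Note that with plain modulo routing, changing the number of shards remaps most keys, which is one reason not to partition before it is actually required.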

Reduce logic in SQL Database

- CRUD is acceptable.

Latency Shifts

Latency cuts across two areas: internal server to server, and device to service. Latency has to be built into the design.

References

1. http://www.windowsazure.com/en-us/develop/net/architecture/
