My last post concentrated on Service Bus Queues. Customers often ask me when to use Azure Queues vs. Service Bus Queues. In this post I try to lay out the considerations that help make a better choice between the two.

Digging deeper into Azure Queues: the expectation is that the Windows Azure Queue will be a lower-cost alternative to Service Bus Queues. In principle, Windows Azure Queues (referred to as WAQ from here on):

- Are an asynchronous, reliable-delivery messaging construct.

- Are highly available, durable and performance efficient. The throughput that a WAQ can sustain is an area of some research.

- Provide At-Least-Once processing: a message may be delivered to a receiver more than once.

- Support a REST-based interface.

- Have no limit on the number of messages stored in a queue.

- Have a message TTL of 1 week; after that, messages are garbage collected.

- Support metadata in the form of name-value pairs.

- Have a maximum message size of 64 KB.

- Accept binary message content; when the message is read back, it is returned as XML.

- Give no guarantee on the ordering of messages.

- Have no support for detecting duplicate messages.

- Parameters of a WAQ message include:

- MessageID: a GUID that identifies the message.

- Visibility Timeout: the default is 30 seconds and the maximum is 2 hours. Ideally, read and process the message within this window and then issue a delete.

- PopReceipt: reading a message from the queue starts a visibility timeout for it. The receiver reads the message, tries to complete some processing and then may decide to issue a delete. The message that was read has a PopReceipt associated with it, and the PopReceipt is passed along with the MessageID when issuing the delete.

A PopReceipt is:

- Property of CloudQueueMessage

- Set every time a message is popped from the queue (GetMessage or GetMessages)

- Used to identify the last consumer to pop the message

- A valid pop receipt is required to delete a message

- An exception is thrown if an invalid pop receipt is passed

- The PopReceipt is used in conjunction with the message ID to delete a message for which a visibility timeout is set. We have the following scenarios:

The Delete is issued within the visibility timeout and the message is deleted from the queue. The assumption here is that the message has been read and the required processing has been done; call this the happy path.

The visibility timeout expires before a Delete is issued. This is assumed to be the exception flow (for example, the receiver process has crashed), and the message becomes available in the queue again for re-processing. This failure recovery rarely happens, and it is there for your protection, but it can lead to a message being picked up more than once. Each message has a property, DequeueCount, that tells you how many times the message has been picked up for processing. For example, when receiver A first receives the message, the DequeueCount is 1. When receiver B picks up the message after receiver A’s tardiness, the DequeueCount is 2. This becomes a strategy for detecting a problem or poison message and routing it to a log, repair and resubmit process.

- A poison message is a message that continually fails to be processed correctly. This is usually caused by some data in the contents that makes the processing code fail. Since the processing fails, the message’s visibility timeout expires and it reappears on the queue. The repair and resubmit process is sometimes a queue that is managed by system management software. You need to set a threshold for DequeueCount and check each message against it.

- MessageTTL: specifies the time-to-live interval for the message, in seconds. The maximum time-to-live allowed is 7 days. If this parameter is omitted, the default time-to-live is 7 days. If a message is not deleted from a queue within its time-to-live, it will be garbage collected and deleted by the storage system.

Notes: All queue names must be lower case. The CreateIfNotExist() method checks whether the queue already exists in Windows Azure and creates it for you if it doesn’t.
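The interplay between the visibility timeout, PopReceipt and DequeueCount described above can be sketched with a small in-memory model. This is a toy simulation in Python, not the Azure SDK: the class `InMemoryQueue`, the function `process_next` and the threshold `MAX_DEQUEUE_COUNT` are names assumed for illustration only. The sketch counts the first dequeue as 1.

```python
import time
import uuid

MAX_DEQUEUE_COUNT = 3  # hypothetical poison-message threshold


class InMemoryQueue:
    """Toy stand-in for a storage queue (illustration only, not the Azure SDK)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._messages = {}  # message_id -> message state

    def put(self, body):
        message_id = str(uuid.uuid4())
        self._messages[message_id] = {
            "body": body,
            "visible_at": float("-inf"),  # visible immediately
            "pop_receipt": None,
            "dequeue_count": 0,
        }
        return message_id

    def get(self, visibility_timeout=30):
        """Return (id, pop_receipt, dequeue_count, body) or None if nothing is visible."""
        now = self._clock()
        for message_id, msg in self._messages.items():
            if msg["visible_at"] <= now:
                # Hide the message and hand out a fresh pop receipt.
                msg["visible_at"] = now + visibility_timeout
                msg["pop_receipt"] = str(uuid.uuid4())
                msg["dequeue_count"] += 1
                return message_id, msg["pop_receipt"], msg["dequeue_count"], msg["body"]
        return None

    def delete(self, message_id, pop_receipt):
        """Delete only if the caller holds the most recent pop receipt."""
        msg = self._messages.get(message_id)
        if msg is None or msg["pop_receipt"] != pop_receipt:
            raise ValueError("invalid pop receipt")
        del self._messages[message_id]


def process_next(queue, handler, poison_log):
    """Process one message; return False when the queue has nothing visible."""
    item = queue.get(visibility_timeout=30)
    if item is None:
        return False
    message_id, pop_receipt, dequeue_count, body = item
    if dequeue_count > MAX_DEQUEUE_COUNT:
        # Too many failed attempts: route to the repair log and remove it.
        poison_log.append(body)
        queue.delete(message_id, pop_receipt)
        return True
    try:
        handler(body)
    except Exception:
        # Leave the message alone; it reappears after the visibility timeout.
        return True
    queue.delete(message_id, pop_receipt)  # happy path
    return True
```

In this model a receiver that lost its turn (another receiver has popped the message since) gets an error on delete, while a message whose handler keeps failing ends up in `poison_log` once its dequeue count exceeds the threshold.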

Comparison of Azure Queues with Service Bus Queues

A good post that covers this comparison can be found here - http://preps2.wordpress.com/2011/09/17/comparison-of-windows-azure-storage-queues-and-service-bus-queues/

Design Consideration for Azure Queues

Messages are pushed into the queue; the receiver reads a message, processes it and deletes it. The general technique used for reading messages from a queue is polling. A classic queue listener with a polling mechanism may not be the optimal choice with Windows Azure queues, because the Windows Azure pricing model measures storage transactions in terms of application requests performed against the queue, regardless of whether the queue is empty or not. If the number of messages in the queue increases, “load leveling” will kick in and more receiver roles will be spun up. These receivers will continue to run and accrue cost.

The costing of a single queue listener using polling mechanism

Assume a hypothetical situation in which a single queue listener constantly polls for messages in the queue. The business transaction data arrives at regular intervals. However, let’s assume:

- The solution is busy processing workload just 25% of the time during a standard 8-hour business day.

- That results in 6 hours (8 hours * 75%) of “idle time” when there may not be any transactions coming through the system.

- Furthermore, the solution will not receive any data at all during the 16 non-business hours every day.

Total idle time = 22 hours. During that time the polling function still performs dequeue work, i.e. calls GetMessage(), which amounts to:

22 hours × 60 min/hour × 60 transactions/min (assuming polling once per second) = 79,200 transactions/day

Cost of 100,000 transactions = $0.01

The storage transactions generated by a single dequeue thread in the above scenario will add approximately 79,200 / 100,000 × $0.01 × 30 days = $0.238 per month for 1 queue listener in polling mode.

Architects will not plan for a single queue listener for the entire application; chances are the number of queue listeners will be high and there will be different queues for different requirements. I’m assuming a total of 200 queues used in an application with polling:

200 queues × $0.238 ≈ $47.60 per month. This is the cost incurred while the solution was not performing any computations at all, just checking the queues to see if any work items are available.
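The arithmetic above can be reproduced with a few lines of Python, which also makes it easy to try other polling intervals or prices. The function names and parameters here are assumptions made for illustration; the numbers are the ones used in this post.

```python
def idle_polls_per_day(idle_hours, polls_per_second=1.0):
    """Number of empty-queue polls issued during the idle hours of one day."""
    return idle_hours * 60 * 60 * polls_per_second


def monthly_idle_cost(idle_hours, listeners=1, polls_per_second=1.0,
                      price_per_100k=0.01, days=30):
    """Monthly cost of polling empty queues, in dollars."""
    polls = idle_polls_per_day(idle_hours, polls_per_second)
    return polls / 100_000 * price_per_100k * days * listeners


if __name__ == "__main__":
    print(idle_polls_per_day(22))                 # polls per day for one listener
    print(monthly_idle_cost(22))                  # one listener, per month
    print(monthly_idle_cost(22, listeners=200))   # 200 listeners, per month
```

With the figures from this post, one listener comes to roughly $0.24 per month and 200 listeners to roughly $47.5 per month before rounding.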

Addressing The Polling Hell…

To address the polling hell, the following techniques can be used:

- Back-off polling: a method to lessen the number of transactions against your queue and therefore reduce the bandwidth used. A good implementation can be found here: http://www.wadewegner.com/2012/04/simple-capped-exponential-back-off-for-queues/

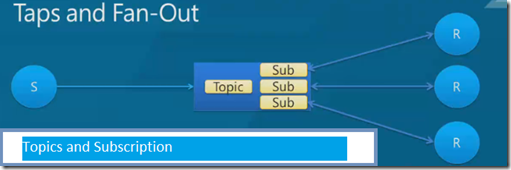

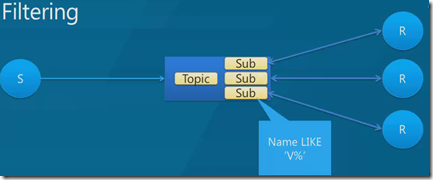

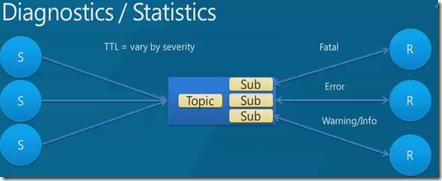

- Triggering (push-based model): A listener subscribes to an event that is triggered (either by the publisher itself or by a queue service manager) whenever a message arrives on a queue. The listener in turn can initiate message processing, and so does not have to poll the queue to determine whether any new work is available. Implementing a push-based model is made easier by the introduction of internal IP addresses for roles. An internal endpoint in a Windows Azure role is essentially the internal IP address automatically assigned to a role instance by the Windows Azure fabric. This IP address, along with a dynamically allocated port, creates an endpoint that is only accessible from within the hosting datacenter, with some further visibility restrictions. Once registered in the service configuration, the internal endpoint can be used for spinning up a WCF service host in order to make a communication contract accessible to the other role instances. A publish/subscribe implementation based on this is straightforward. The limitations of this approach are:

- Internal endpoints must be defined ahead of time – these are registered in the service definition and locked down at design time. If the endpoints were dynamic, a small registry could be implemented for the same purpose.

- The discoverability of internal endpoints is limited to a given deployment – the role environment doesn’t have explicit knowledge of all other internal endpoints exposed by other Azure hosted services;

- Internal endpoints are not reachable across hosted service deployments – this could render itself as a limiting factor when developing a cloud application that needs to exchange data with other cloud services deployed in a separate hosted service environment even if it’s affinitized to the same datacenter;

- Internal endpoints are only visible within the same datacenter environment – a complex cloud solution that takes advantage of a true geo-distributed deployment model cannot rely on internal endpoints for cross-datacenter communication;

- The event relay via internal endpoints cannot scale as the number of participants grows – the internal endpoints are only useful when the number of participating role instances is limited and with underlying messaging pattern still being a point-to-point connection, the role instances cannot take advantage of the multicast messaging via internal endpoints.

Note: Provided the application is not a large-scale application spread across geographic locations, the pub/sub model can still be implemented using the above approach. The limitations hit hard in large-scale, geo-distributed applications; for those, the idea would be to go for Service Bus.

- Look at Service Bus Queues as an alternative after a complete cost analysis, as the pub/sub implementation on Service Bus is available out of the box.
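As an illustration of the back-off polling technique listed above, here is a minimal sketch of a capped exponential back-off loop in Python. It is not the implementation from the linked post; `poll_with_backoff`, its parameters and the injected `get_message`, `handle` and `sleep` callables are all assumptions chosen for this example.

```python
import time


def poll_with_backoff(get_message, handle, min_delay=1.0, max_delay=60.0,
                      max_polls=None, sleep=time.sleep):
    """Poll a queue, doubling the wait after each empty poll up to max_delay.

    get_message: callable returning a message, or None when the queue is empty.
    handle:      callable that processes one message.
    max_polls:   optional cap on the number of polls (None means run forever).
    """
    delay = min_delay
    polls = 0
    while max_polls is None or polls < max_polls:
        polls += 1
        message = get_message()
        if message is not None:
            handle(message)
            delay = min_delay  # work arrived: go back to fast polling
        else:
            sleep(delay)
            delay = min(delay * 2, max_delay)  # empty queue: back off, capped
    return polls
```

With a 1 second minimum and a 60 second cap, an idle listener settles at one storage transaction per minute instead of one per second, at the price of up to a minute of extra latency for the first message after a quiet period.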

Dynamic Scaling

Dynamic scaling is the technical capability of a given solution to adapt to fluctuating workloads by increasing and reducing working capacity and processing power at runtime. The Windows Azure platform natively supports dynamic scaling through the provisioning of a distributed computing infrastructure on which compute hours can be purchased as needed.

It is important to differentiate between the following 2 types of dynamic scaling on the Windows Azure platform:

- Role instance scaling refers to adding and removing web or worker role instances to handle the point-in-time workload. This often involves changing the instance count in the service configuration. Increasing the instance count causes the Windows Azure runtime to start new instances, whereas decreasing the instance count causes it to shut down running instances. Adding a new instance is not instantaneous; allow on the order of 10 minutes for it to become available.

- Process (thread) scaling refers to maintaining sufficient capacity in terms of processing threads in a given role instance by tuning the number of threads up and down depending on the current workload.

Dynamic scaling in a queue-based messaging solution calls for a combination of the following general recommendations:

- Monitor key performance indicators including CPU utilization, queue depth, response times and message processing latency.

- Dynamically increase or decrease the number of role instances to cope with the spikes in workload, either predictable or unpredictable.

- Programmatically expand and trim down the number of processing threads to adapt to variable load conditions handled by a given role instance.

- Partition and process fine-grained workloads concurrently using the Task Parallel Library in the .NET Framework 4.

- Maintain a viable capacity in solutions with highly volatile workload in anticipation of sudden spikes to be able to handle them without the overhead of setting up additional instances.
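To make the thread-scaling recommendation above concrete, here is one possible heuristic, sketched in Python: derive the desired number of processing threads from the current queue depth and clamp it between a floor and a ceiling. The function name and every parameter value are hypothetical; a real solution would tune them from the monitored indicators (queue depth, latency, CPU).

```python
import math


def target_worker_count(queue_depth, messages_per_worker=50,
                        min_workers=1, max_workers=16):
    """Suggest how many processing threads to run for a given queue depth.

    messages_per_worker is an assumed backlog that one thread can comfortably
    drain; min_workers keeps some capacity warm for sudden spikes.
    """
    needed = math.ceil(queue_depth / messages_per_worker)
    return max(min_workers, min(max_workers, needed))
```

Keeping `min_workers` above zero reflects the last recommendation in the list: some capacity is kept available so that a sudden spike does not have to wait for new threads or instances.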

Note: To implement a dynamic scaling capability, consider the use of the Microsoft Enterprise Library Autoscaling Application Block that enables automatic scaling behavior in the solutions running on Windows Azure. The Autoscaling Application Block provides all of the functionality needed to define and monitor autoscaling in a Windows Azure application. It covers the latency impact, storage transaction costs and dynamic scale requirements.

Additional Consideration for Queues

HTTP 503 Server Busy on Queue Operations

At present, the scalability target for a single Windows Azure queue is “constrained” at 500 transactions/sec. If an application attempts to exceed this target, for example by performing queue operations from multiple role instances running hundreds of dequeue threads, it may receive an HTTP 503 “Server Busy” response from the storage service. I have found the Transient Fault Handling Application Block pretty handy for implementing the retry mechanism - http://msdn.microsoft.com/en-us/library/hh680905(v=pandp.50).aspx
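The retry idea can be sketched independently of any particular library. The Python below is a generic illustration, not the Transient Fault Handling Application Block API: `ServerBusyError` is a hypothetical exception standing in for an HTTP 503 response, and `with_retries` and its parameters are assumptions made for this example.

```python
import random
import time


class ServerBusyError(Exception):
    """Hypothetical stand-in for an HTTP 503 "Server Busy" response."""


def with_retries(operation, max_attempts=5, base_delay=0.5, max_delay=8.0,
                 sleep=time.sleep):
    """Run operation(), retrying on ServerBusyError with exponential back-off.

    The wait doubles after each failed attempt, is capped at max_delay, and
    gets a small random jitter so many throttled clients do not retry in step.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ServerBusyError:
            if attempt == max_attempts:
                raise  # give up and surface the error to the caller
            delay = min(base_delay * 2 ** (attempt - 1), max_delay)
            sleep(delay + random.uniform(0, delay / 2))
```

Only the throttling error is retried here; any other exception propagates immediately, since retrying a non-transient failure just adds load and delay.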

Important References

- Understanding Windows Azure Storage Billing – Bandwidth, Transactions, and Capacity post on the Windows Azure Storage team blog.

- Queue Read/Write Throughput study published by eXtreme Computing Group at Microsoft Research.

- The Transient Fault Handling Framework for Azure Storage, Service Bus & Windows Azure SQL Database project on the MSDN Code Gallery.

- The Autoscaling Application Block in the MSDN library.

- Windows Azure Storage Transaction - Unveiling the Unforeseen Cost and Tips to Cost Effective Usage post on Wely Lau’s blog.