Walk into an organization's IT department and you find a large number of applications that support the business: some are critical by nature, others not so critical. We often get into this debate with the customer: "I'm really not ready to move the complete stack into the cloud; I'd prefer a fair mix of private and public cloud." The customer may also come back and say, "I only want to use the compute of the public cloud in certain peak situations." The discussion is a fair one, because this is a real problem, and the solution we have for it is the hybrid cloud. Hybrid cloud is a mix of both, though we have yet to draw clear boundaries around how much of each goes into the mix.

Getting the Definition of Hybrid Cloud (Hopefully) Correct….

So, by definition, what do I mean by hybrid cloud? As defined by NIST…

Hybrid cloud. The cloud infrastructure is a composition of two or more distinct cloud infrastructures (private, community, or public) that remain unique entities, but are bound together by standardized or proprietary technology that enables data and application portability (e.g., cloud bursting for load balancing between clouds).

Some real disruptive thinking………………………

Using two sets of criteria to define cloud deployment models introduces inconsistency and ambiguity.

As defined in SP 800-145, a hybrid cloud is a composition of infrastructures, yet at the same time a private cloud and a public cloud are defined according to their intended audiences. This change of criteria in classifying a hybrid cloud introduces inconsistency and ambiguity into the deployment models presented in SP 800-145. Forming a concept with two sets of criteria is simply a confusing way to describe an already very confusing subject like cloud computing.

"Hybrid cloud" is an ambiguous, confusing, and frequently misused term.

A hybrid cloud is a composition of two or more distinct cloud infrastructures (private, community, or public) as stated in SP 800-145. That is to say, a hybrid cloud can be a composition of private/private, private/community, private/public, etc. From a consumer’s point of view, they are in essence a private cloud, a private cloud, and a public or private cloud respectively. Regardless of how a hybrid cloud is constructed, if it is intended for public consumption it is a public cloud, and if it is intended for a particular group of people it is a private cloud according to SP 800-145. Essentially, a composition of clouds is still a cloud, and it is either a public or a private cloud; it cannot be both at the same time.

For many enterprise IT professionals, a hybrid cloud means an on-premise private cloud connected with some off-premise resources. Notice that these off-premise resources are not necessarily a cloud in reality. In such a case, it is simply a private cloud with some extended boundaries. A cloud is a set of capabilities and must be referenced in the context of the delivered application. Just placing a VM in the cloud or referencing a database placed in the cloud does not make the VM or the database itself a public cloud application.

The key is that a hybrid cloud is a derived concept of clouds. Namely, a hybrid can be an integration, a modification, or an extension of cloud infrastructures, or a combination of all of these. A hybrid is nevertheless not a new concept or a different deployment model, and it should not be classified as a unique deployment model in addition to the two essential ones, i.e. the public and private cloud models. A cloud is either public or private, and there isn’t a third kind of cloud deployment model based on the intended users.

“Hybrid cloud” is perhaps a great, catchy marketing term. For many, a hybrid seems to suggest something advanced, leading edge, and magical, and therefore better and preferred. The truth is that "hybrid cloud" is an ambiguous, confusing, and frequently misused term. It confuses people, injects noise into a conversation, and only further confirms the state of confusion and the inability to clearly understand what cloud computing is.

Hybrid Cloud 101….

I needed a basic 101 on hybrid cloud. This is a hybrid cloud video by VMware; it does not require any VMware knowledge, the idea is simply to get the concept clear.


Cloud, like any other IT trend, needs a long-term vision and a short-term adaptive strategy that changes with market requirements, while the long-term vision continues to guide the overall direction. The hybrid cloud is a reality and does fit into the long-term vision; however, the short-term strategy is skewed, with each vendor using the hybrid space as a sales pitch, for example Microsoft, Amazon and VMware.

Understanding the Hybrid Cloud Play……

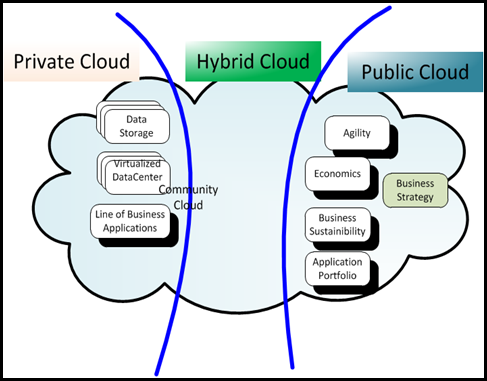

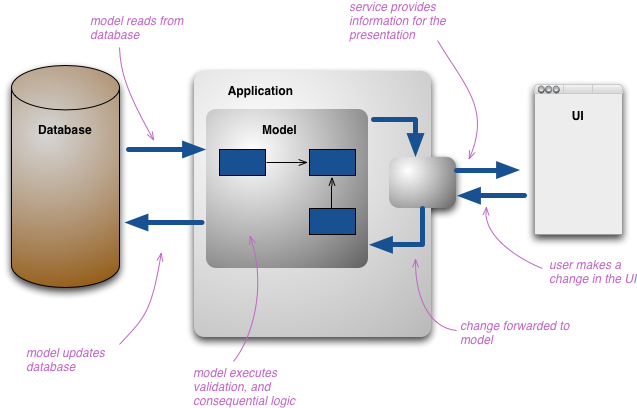

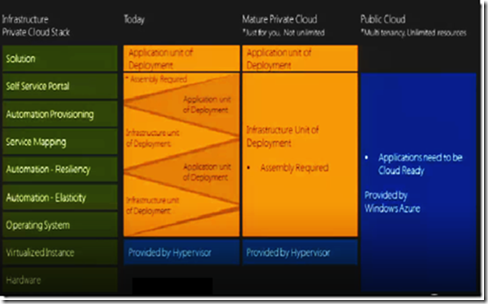

Referring to the diagram above, most organizations start off their Private Cloud experiment with virtualization: the first step is a virtualized data center, next into the Private Cloud come the line-of-business applications, and finally data storage.

Community Cloud is the sharing of a private cloud within or between organizations.

The Public Cloud pretty much follows the NIST guidelines. I won't get too much into that; the focus here is the hybrid strategy, and the public cloud picture is drawn with respect to it. The key element in the Public Cloud is the business strategy (in conjunction with hybrid cloud only).

Hybrid Cloud is the entire picture inclusive of the public & the private cloud.

Precision Questioning for the Hybrid Cloud…

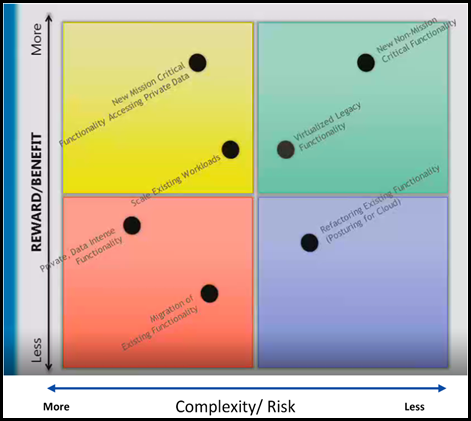

Getting past the definition and 101 phase, the next question that pops up is what to use Hybrid Cloud for, and when. Precision questioning can help you navigate the Hybrid Cloud discussion better.

1. What new non-mission-critical functionality is planned to be built in the next 2 years? This is a very good candidate for the cloud: since it is new functionality, it can be a candidate for PaaS or SaaS depending on the nature of the workload. This is typically a low-risk, low-return item that can be tackled very easily. Driving the value proposition for this question is not difficult.

2. Can some applications directly benefit from virtualization? This can be a quick turnaround on the question of “maximizing the use of the resources”. However, it does not bring down the overall cost of operation, whether public or private. This is typically low-hanging fruit.

3. What new mission-critical functionality, or functionality accessing private data, is planned to be built in the next 2 years? Example: a payroll system. This conversation can be fragile and will most probably be driven into the private cloud of the organization. This is high return and low risk if hosted on a private cloud, as most of the fear-driven questions are directly addressed by “we are in a private cloud”.

4. Do you see any of your applications needing to scale for an event or during a specific period of the year? Example: tax filing applications. The idea here is to use the cloud to scale the existing workload.

5. Refactoring existing functionality: this is where the existing ESB is taken and made cloud ready. This is a difficult conversation to have with the customer, and it depends completely on the ESB’s state of readiness to move to the cloud. Depending on how the discussion with the customer goes, this will most probably turn out to be a low priority.

6. The final key question: “How much of the existing private data would you want to move to the cloud?” This is a high-risk and very high-return item. The most that can work here is a private cloud.

7. “Migration of existing functionality, i.e. existing line-of-business applications, to the cloud”.
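The questions above can be read as a rough decision procedure. The sketch below is only an illustration of that reading, not a formal method: the `Workload` fields, the `suggest_placement` function, and the order in which the checks are applied are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of an application, loosely following the questions above."""
    name: str
    is_new: bool                # planned new functionality (questions 1 and 3)
    mission_critical: bool      # e.g. a payroll system (question 3)
    touches_private_data: bool  # questions 3 and 6
    seasonal_peaks: bool        # e.g. tax filing (question 4)


def suggest_placement(w: Workload) -> str:
    """Return a first-cut placement; the ordering of the checks is an assumption."""
    # Mission-critical work and private data are steered to the private cloud.
    if w.mission_critical or w.touches_private_data:
        return "private cloud"
    # Workloads with event-driven or seasonal peaks use the public cloud to scale.
    if w.seasonal_peaks:
        return "private cloud with public burst"
    # New, non-critical functionality is the low-risk PaaS/SaaS candidate.
    if w.is_new:
        return "public cloud (PaaS/SaaS)"
    # Refactoring and migration of existing systems need their own discussion.
    return "review case by case"


if __name__ == "__main__":
    for w in (
        Workload("payroll", True, True, True, False),
        Workload("campaign-site", True, False, False, False),
        Workload("event-registration", False, False, False, True),
    ):
        print(w.name, "->", suggest_placement(w))
```

In a real engagement each answer would be weighted by risk and return rather than resolved by a fixed order of checks; the point here is only that the questions partition the application portfolio.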

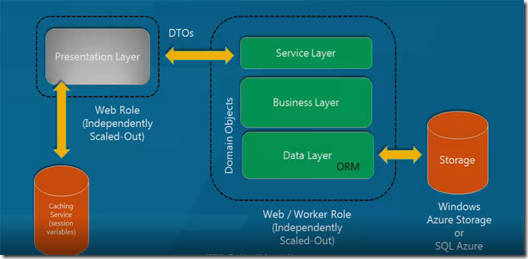

Architecting Solutions that span Private & Public Clouds

- On Premises IT with Off Premises Cloud.

- On Premises IT with Multiple Off Premises Clouds

- Cloud Striping

- Distributing Community Cloud

- Lift and Shift

- Batch at Scale/ Bursting

- Images in CDN

- Adding OData

- Shopping Cart

- Compliance

- Partner + PaaS

- Cloud + Optimized

- Outsourcing

- Synchronization

- Scaling and Caching

- Pull back

- Big Data

- Consumerization
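Of the patterns listed, "Batch at Scale/Bursting" is the one that maps most directly onto the customer's "only use public compute in peak situations" request. A minimal sketch of the capacity arithmetic follows; the function name `plan_burst`, its parameters, and the idea of measuring everything in requests per second are assumptions for illustration, not taken from any vendor's API.

```python
import math


def plan_burst(demand: int, private_capacity: int, instance_capacity: int) -> dict:
    """Split demand between the private cloud and burst instances in a public cloud.

    All three arguments use the same hypothetical unit (e.g. requests per second).
    """
    if demand < 0 or private_capacity < 0:
        raise ValueError("demand and private_capacity must be non-negative")
    if instance_capacity <= 0:
        raise ValueError("instance_capacity must be positive")

    # Serve as much as possible from the private cloud first.
    on_private = min(demand, private_capacity)
    # Whatever does not fit overflows to the public cloud.
    overflow = demand - on_private
    # Round up: a partially used instance still has to be provisioned.
    public_instances = math.ceil(overflow / instance_capacity)

    return {
        "private": on_private,
        "public": overflow,
        "public_instances": public_instances,
    }


if __name__ == "__main__":
    print(plan_burst(demand=800, private_capacity=1000, instance_capacity=150))
    print(plan_burst(demand=1200, private_capacity=1000, instance_capacity=150))
```

Outside the peak, the public side of the plan is empty, which is exactly the cost argument for bursting: the public cloud is paid for only while the overflow exists.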

Research Speak……

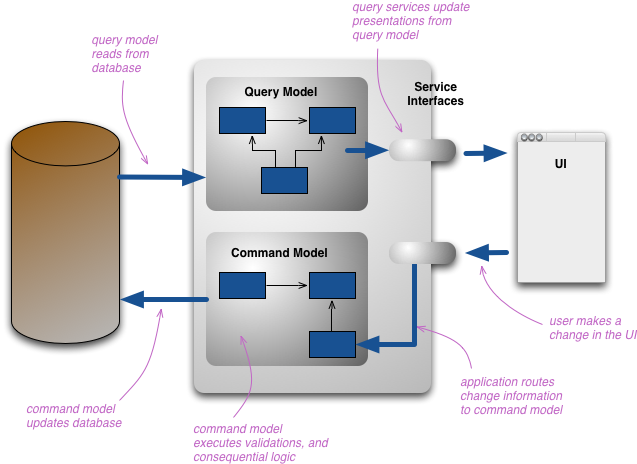

NIST & Gartner have come out with some models of the hybrid cloud. NIST's seems to be well defined and easy to understand in terms of what they mean by hybrid.

Below is the NIST definition represented.

The Gartner definition revolves around what they call Hybrid IT, in which the IT organization acts as a broker and switches between internal and external clouds.

The Gartner definition is very sketchy and offers no clear guidelines. The IT organization acting as a broker is a very noble concept, but putting it into practice will call for a very different conversation once we look at the overall Hybrid IT strategy from a true implementation point of view.

The Gartner report can be found here https://skydrive.live.com/redir.aspx?cid=b4c4034e55e4a63f&resid=B4C4034E55E4A63F!598&parid=root.

Implementation excerpt from the Gartner Report…..

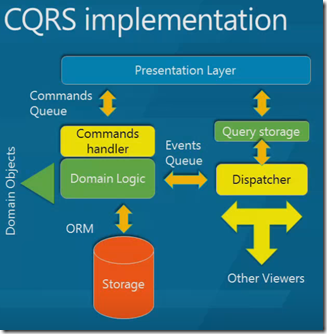

Hybrid IT is likely to rely on hybrid clouds. Hybrid clouds are a connection or integration between two clouds, usually between an internal private cloud and an external public cloud. Hybrid clouds are constructed by using software or hardware appliances that enable applications and data to more easily migrate among connected clouds. For example, many applications are dependent on identity management systems to authenticate users or to consume terabytes of data, or they have deterministic input/output (I/O) latency requirements. These dependencies often prevent applications from migrating to the external cloud. Hybrid cloud solutions solve each of these dependencies in unique ways.

Essentially, two types of hybrid clouds exist:

■ Service interface-based: The service interface-based hybrid cloud utilizes an appliance to present a list of cloud services to the end user (i.e., the cloud consumer). When the user selects a cloud service, the appliance redirects the user to an internal or external cloud service based on the consumer's identity.

■ Infrastructure-based: The infrastructure-based hybrid cloud is essentially a software or appliance bridge designed to augment internal IT resources and integrate two clouds by connecting the back-end infrastructure of an internal cloud to one or more external cloud services.
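To make the service interface-based type concrete, here is a small sketch of the redirect decision such an appliance would make. Everything in it is hypothetical: the `Consumer` type, the `CATALOG` contents, the `example` URLs, and the rule that members of an "employees" group go to the internal cloud are assumptions for illustration and are not described in the Gartner report.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Consumer:
    """Hypothetical cloud consumer, identified by the groups it belongs to."""
    user_id: str
    groups: FrozenSet[str]


# Hypothetical service catalog: each service lists the clouds it is offered from.
CATALOG = {
    "reporting": {
        "internal": "https://reports.corp.example",
        "external": "https://reports.cloud.example",
    },
    "payroll": {
        "internal": "https://payroll.corp.example",  # never exposed externally
    },
}

# Assumption: only these groups may be redirected to internal cloud services.
INTERNAL_GROUPS = frozenset({"employees"})


def resolve(consumer: Consumer, service: str) -> str:
    """Pick the internal or external endpoint for a service from the consumer's identity."""
    endpoints = CATALOG[service]  # raises KeyError for services not in the catalog
    if consumer.groups & INTERNAL_GROUPS and "internal" in endpoints:
        return endpoints["internal"]
    if "external" in endpoints:
        return endpoints["external"]
    raise PermissionError(f"{consumer.user_id} may not use {service}")


if __name__ == "__main__":
    employee = Consumer("alice", frozenset({"employees"}))
    partner = Consumer("bob", frozenset({"partners"}))
    print(resolve(employee, "reporting"))
    print(resolve(partner, "reporting"))
```

The infrastructure-based type has no equivalent decision point at the user level; the bridging happens between the back ends of the two clouds, so a sketch like this applies only to the service interface-based case.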

Closing Comments…….

By definition, no one has built a pure hybrid cloud component. Taking the example of a large multinational, what they have is an on-premise and off-premise kind of IT infrastructure, which complements the Gartner story. Revisiting the definition, this is what comes to my mind.

“An integrated infrastructure whose unique assets, although separated by well-defined boundaries, are connected via a standardized or proprietary technology to broker data and application interoperability in order to optimize computing resources, increase shareholder value and reduce risks”.

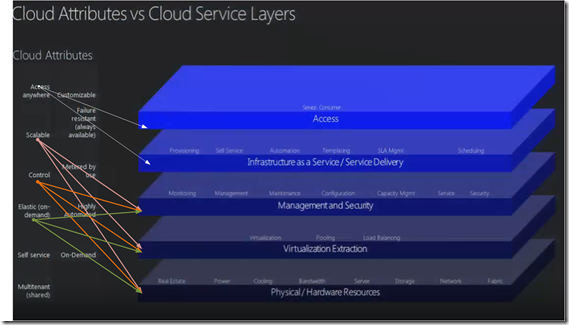

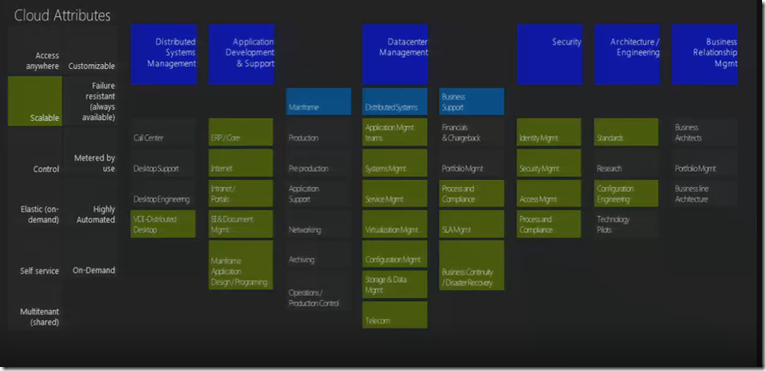

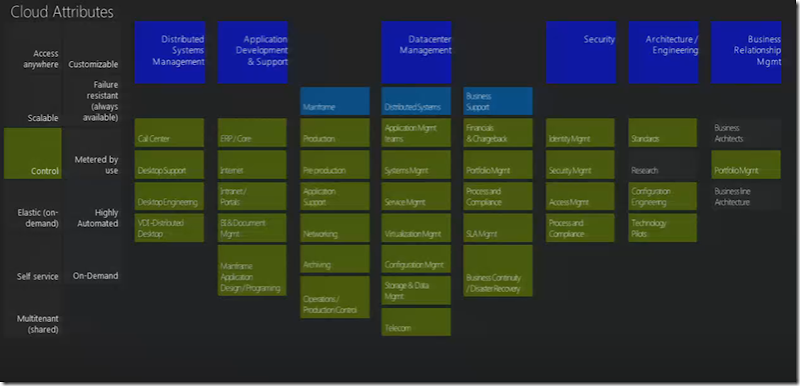

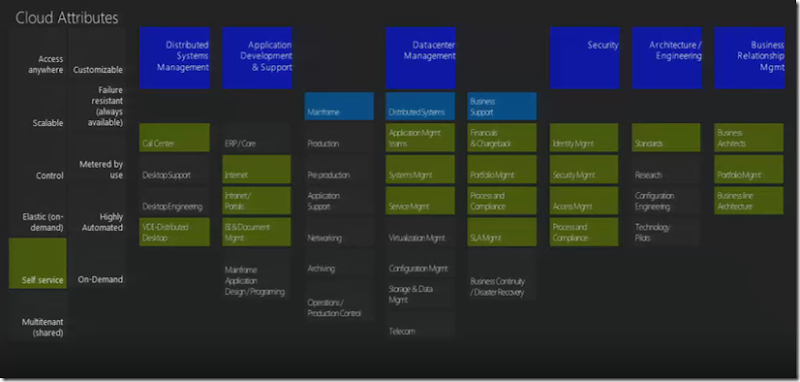

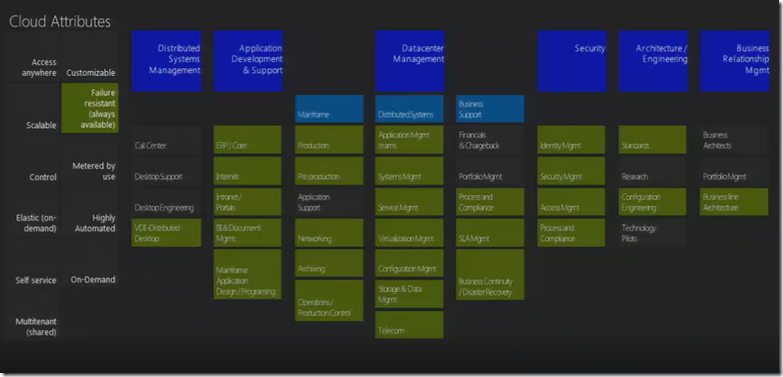

A more detailed Hybrid Cloud post is coming next …… Applying the cloud attributes defined by NIST to Hybrid Cloud is what I plan to write about next.